At the 2025 AI for Good Global Summit, our Executive Director, Danna Ingleton, participated in a panel at the “AI for Human Rights: Smarter, Faster, Fairer Monitoring” workshop. AI is reshaping how human rights data is documented, organised, and analysed, but its use raises urgent ethical questions. These reflections stem from that conversation.

Note: My speaking notes and the contents of this blog post reflect the collective knowledge of our organisation.

A Transformative Moment

We are at a turning point. Artificial Intelligence (AI) and Machine Learning (ML) are reshaping not just the general nature of human rights work but the specifics of how we collect, analyse, and act on human rights information. But this transformation comes with deep ethical questions that cannot be ignored. One of my panel-mates asked us to reflect on the most important question inherent to ‘AI for Good’, which is:

Is all AI antithetical to human rights?

This is a question that continues to haunt me, as it should. If we rush forward uncritically, we risk undermining the very values we claim to protect.

At HURIDOCS, our mission is clear: to ensure that defenders and organisations around the world can collect, preserve, and share human rights information securely and effectively. We support data flows that connect civil society, the United Nations, governments, and other stakeholders working toward justice.

Over the years, our work has grown beyond documentation practices to include building open-source technologies rooted in those same methodologies. Our flagship platform, Uwazi, is used by over 400 NGOs and advocacy projects globally to manage and analyse human rights data.

In recent years, we’ve begun building ML tools within Uwazi: carefully, deliberately, and always in service of the communities we support. This is what brought me to the AI for Good Summit: to share what we’ve learned about the potential, tensions, and ethical imperatives of using AI in human rights documentation, and to acknowledge how much we still have to learn about doing it well.

Reflections from our session

Some key themes and provocations from our session stood out:

1. Rethinking Innovation: Knowing When Not to Use AI

Sometimes, the smartest move is to step back. AI should never be implemented just to “keep up” or chase funding. We must ask: Does this tool serve the mission, or distract from it?

2. Enhanced Analytical Capacity: But at What Cost?

AI can process immense datasets (social media content, satellite imagery, testimony archives, and more) far faster than humans ever could. But speed does not guarantee accuracy, ethical use, or true representation. Biases in training data can amplify harm. We must pair AI’s power with human diligence, context, experience and voice.

3. Documentation at Scale: Access vs. Privacy

ML tools make it easier to extract insights from large volumes of human rights data. But much of that data is sensitive and personal. Without explicit consent, we risk violating the dignity and safety of those whose stories we seek to amplify.

4. Truth vs. Deepfakes: Preserving Evidence Integrity

AI can authenticate documents, but it can also create convincing forgeries. We must invest in evidence validation protocols and remain alert to how technology might be weaponised against human rights defenders.

5. Empowering Local Actors: Avoiding New Dependencies

AI has democratised access to advanced tools, enabling local actors to document abuses at scale. Yet dependency on proprietary systems threatens data sovereignty and long-term autonomy. Our collective goal needs to be community-led design and open-source technology that local defenders own and control.

6. Public Oversight: Demanding Transparency and Accountability

Many AI systems are opaque, built by private companies without public oversight. As human rights actors, we must demand answers to tough questions about how models are trained, what biases they embed, and what environmental and human costs they carry. Efficiency alone cannot justify opaque or harmful tools.

An Invitation

To those working at the intersection of human rights and technology: bring your insights, your scepticism, and your lived experience into these conversations.

Let’s co-create technologies that are not only innovative but grounded in justice, dignity, and community wisdom.

And let us not forget the environmental cost of AI. Are the human rights gains worth the ecological impact of the infrastructure needed to run large-scale models? This, too, is part of our ethical calculus.

AI holds promise for transforming human rights work, but only if we use it through a critical lens coupled with humility, transparency, and care. Let’s ensure AI remains a tool for human rights, not the other way around.

What We Do (and What We Don’t) at HURIDOCS

At HURIDOCS, we do not build ML tools for the sake of innovation, nor do we implement every AI feature in every Uwazi instance. Innovation, for us, also means knowing when not to use AI. We start with the needs of our partners (human rights defenders, researchers, and advocates) and assess what will truly help.

That said, where ML does offer value, we embed it throughout the project cycle, particularly in the collection and organisation phases of human rights documentation.

Tools We’ve Developed

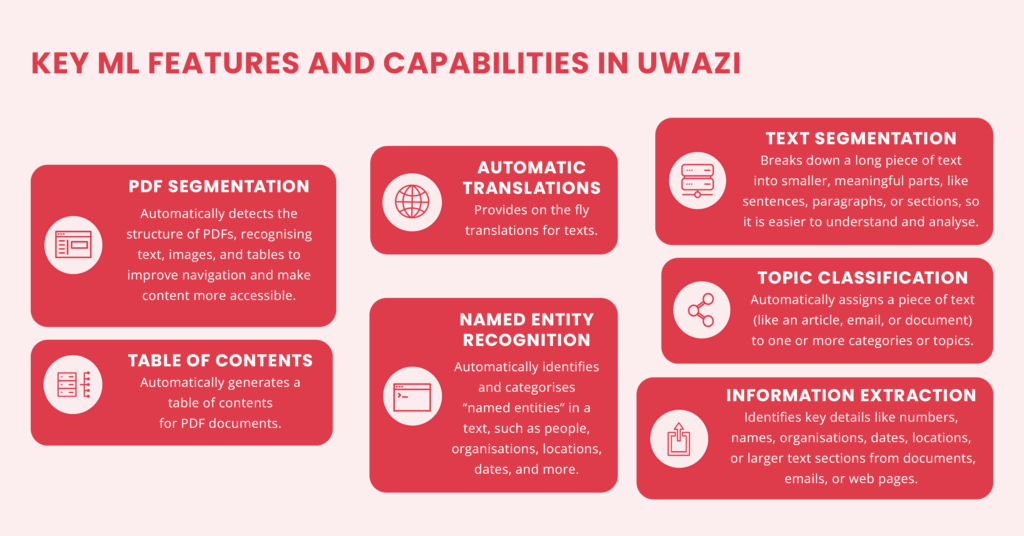

Here are some of the ML-powered features in Uwazi, developed based on real-world use cases:

- Crawlers

  Collects data from multiple sources and automates the information-gathering process.

- Automatic Translation

  Currently supports over 22 languages using open-source models to reduce language barriers and broaden access.

- Metadata Extraction

  Uses machine learning to identify and pull out structured metadata from PDFs and documents, including names, dates, and topics.

- Entity Extraction

  Identifies key information like people, organisations, locations, and events within documents. Closely related to Named Entity Recognition (NER).

- Paragraph Extraction

  Extracts full paragraphs based on specific criteria, offering context that single keywords or phrases often miss.

- Text Segmentation

  Breaks documents into meaningful parts, such as sentences, paragraphs, or sections, to simplify analysis.

- Automatic Table of Contents

  Generates structured overviews of documents for easier navigation and reference.

All of these tools aim to enhance, not replace, human expertise and, importantly, are built within an open-source environment.

Our Guiding Principles

At HURIDOCS, we approach ML development with a strong ethical foundation. Our principles include:

- Security and Privacy First

  We protect sensitive data through high standards and regular audits.

- Human-Centered Design

  Humans remain in the loop at all times. Experts control how AI is used, not the other way around.

- Open-Source Everything

  Our models, code, and methodologies are public and transparent.

- Purpose-Driven Innovation

  Technology should amplify human rights work, not dictate it.

Discover how we balance innovation with integrity, and why we believe AI must serve human rights, not compromise them. Find out more about Uwazi and how HURIDOCS is supporting human rights documentation around the world.