What does responsible AI use look like in a human rights organisation, especially one that builds its own open-source tools with integrated machine learning features?

At HURIDOCS, we started answering that question not by chasing the latest tools or issuing sweeping bans, but by paying attention to what was already happening. Staff were already experimenting, sometimes tentatively, sometimes with critical curiosity. Rather than impose a top-down directive, we began by listening to our teams, their needs and uncertainties, and their broader concerns around AI. The policy we developed isn’t the final word but a starting point. One that reflects our values, makes room for difference, and helps us move with care, not just speed.

Starting where we are

The process of drafting HURIDOCS’ Internal AI Policy and Framework didn’t begin from a blank page or a technocratic impulse to keep up with the times. It began, as many good things do, by simply listening: to each other, to what was already happening, and to the quiet tensions rising between curiosity and caution.

We discovered that some of our colleagues were already testing out AI tools, while others were unsure or uneasy. We opened up space for informal, cross-team conversations. Over a series of working sessions, surveys, and shared documents, we mapped our existing habits, anxieties, and questions. What emerged was not just a policy but a developing practice. One that tries to meet people where they are while offering structure for where we might go.

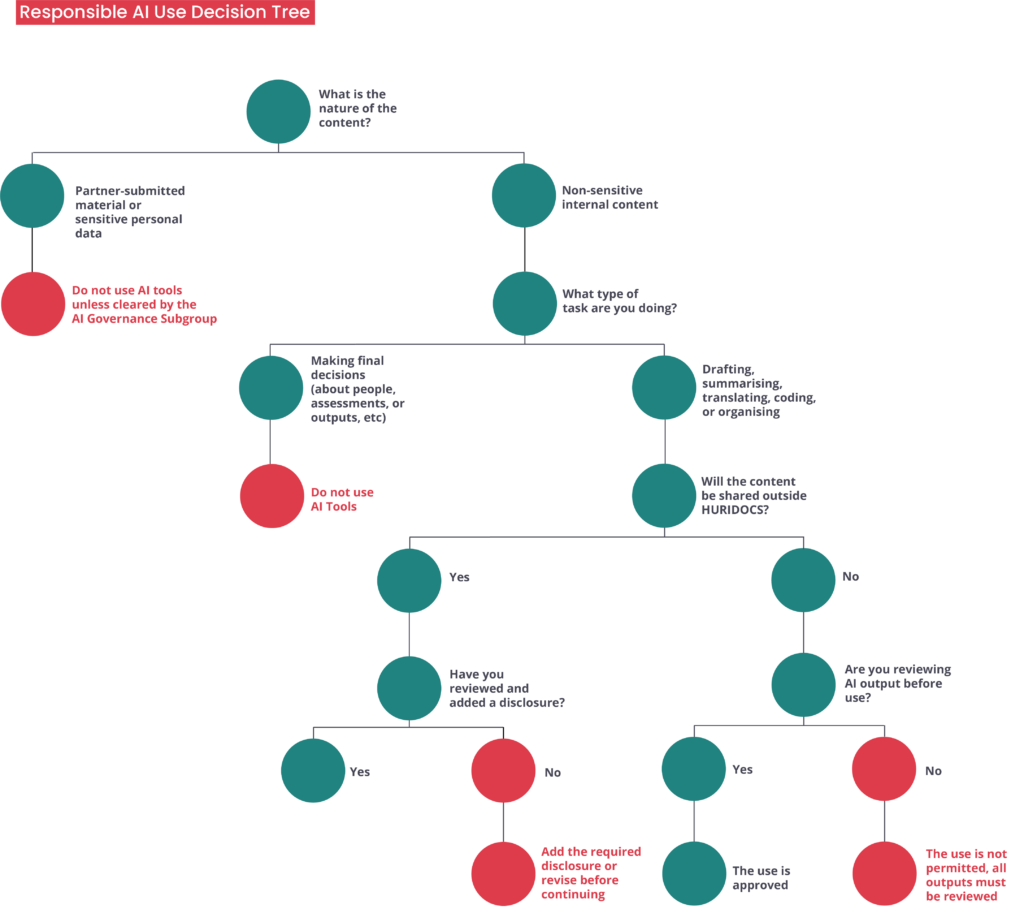

Crucially, we didn’t aim for perfection. We aimed for something usable. Something that could evolve with our needs and values. Alongside grounding principles, we are creating a flexible decision tree, a growing registry of tools, and an “AI Governance Subgroup” headed by a coordinator. AI governance defines strategy, policy, and standards to ensure safe, compliant AI use. Our subgroup’s role is to develop, support, and adapt these governance mechanisms, which are not a one-time output but a living framework meant to evolve with us.

Balancing flexibility and responsibility

One of the hardest challenges was balancing flexibility with accountability. Different teams engage with AI tools in different ways. Our Tech Team might explore code generation, while Programmes staff might use tools for translation, summarisation, or draft writing. What’s appropriate in one context might be risky in another.

Instead of trying to map every possible scenario, we focused on function-based guidance. What’s the task? What’s the content? Who’s the audience? A provisional decision tree emerged. If your content is sensitive, don’t use AI without clearance. If you’re coding or drafting, document your tool and add a disclosure. If you’re making decisions that directly affect people, such as hiring and performance reviews, don’t use these tools.
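The three provisional rules above can be sketched as a small function. This is only an illustration, not the policy itself: the names `Task`, `Outcome`, and `decide` are hypothetical, and the order in which the rules are checked, the `has_clearance` flag, and the fallback for tasks the rules don't mention are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    DO_NOT_USE = "do not use AI tools"
    NEEDS_CLEARANCE = "get clearance before using AI"
    USE_WITH_DISCLOSURE = "document the tool and add a disclosure"
    NOT_COVERED = "not covered by this sketch"


@dataclass(frozen=True)
class Task:
    kind: str                              # e.g. "coding", "drafting", "hiring"
    sensitive_content: bool = False        # does the content include sensitive material?
    affects_people_directly: bool = False  # e.g. hiring, performance reviews
    has_clearance: bool = False            # hypothetical flag: clearance already given


def decide(task: Task) -> Outcome:
    # Assumed ordering: the strictest rule is checked first.
    if task.affects_people_directly:
        return Outcome.DO_NOT_USE
    # Sensitive content needs clearance before any AI use.
    if task.sensitive_content and not task.has_clearance:
        return Outcome.NEEDS_CLEARANCE
    # Coding and drafting are allowed with documentation and a disclosure.
    if task.kind in {"coding", "drafting"}:
        return Outcome.USE_WITH_DISCLOSURE
    # Anything else is outside the three rules described in the text.
    return Outcome.NOT_COVERED
```

For example, `decide(Task(kind="drafting", sensitive_content=True))` returns `Outcome.NEEDS_CLEARANCE`, while `decide(Task(kind="coding"))` returns `Outcome.USE_WITH_DISCLOSURE`. Keeping the questions (task, content, audience) as plain fields makes it easy to revise the rules as the framework evolves.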

We’re not naïve about AI’s broader impact either. There are structural issues that no internal policy can fully solve. The environmental costs of training large models, the concentration of power in a handful of tech oligarchs, and the often-invisible labour that props up these systems are all issues we have to contend with. As a human rights organisation that also champions responsible tech, we can’t look away from them. Even if we don’t have all the answers, we’re committed to asking better questions and seeking solutions together.

What we learned (so far)

First: don’t start with the tech. Start with the people. Whitepapers offered useful framing, but our policy was shaped less by them and more by ‘hallway’ conversations, chat threads, and the rough edges of real use. We didn’t try to anticipate everything. We just wanted to name what mattered (clarity, consent, care) and give people room to try, learn, and even mess up safely.

Second: not all AI use is created equal. Writing an email draft is not the same as analysing human rights data. So we designed for differentiated use. Shared principles, yes, but flexible enough to reflect real differences in risk and context.

And third: it’s okay not to have it all figured out. We’re finding our footing as we go but we’re doing it with intention and awareness. That means leaving room for doubt and disagreement. It means being okay with slow progress. The best kind of resistance to AI’s hype machine might just be a well-placed slowdown.

A policy that lets us move and refuse

We are making a policy that allows us to explore new directions without getting swept up in every shiny thing that comes our way or sweeping the issues that come with them under the rug. It enables us to stay curious and creative, but also empowers us to say “no thanks” when the hype doesn’t serve us.

We don’t want AI to rewire our mission. We want our mission to shape how we use AI. That means designing and iterating on a policy that makes room for pause, reflection, and refusal, while still testing, with curiosity, AI’s claims of better efficiency and richer insights.

Read HURIDOCS’ Internal AI Policy and Framework below:

HURIDOCS-Internal-AI-Govenrance-Policy-and-Framework

This blogpost was drafted with support from ChatGPT for proofreading. The ideas are based on the organisation’s collective experiences that were processed, written, and finalised by members of the HURIDOCS DevComms team. AI assistance and disclosure are in accordance with HURIDOCS’ Internal AI Policy and Framework.